
LAUSD Has A New (And Complicated) Way To Score Schools. We Explain

How should we judge whether a public school is succeeding or struggling academically?

We could look at its standardized test results -- but those scores arguably track poverty levels in a school just as well as they reflect the amount of learning happening.

But the Los Angeles Unified School District just unveiled a metric that claims to offer a fresh perspective on school performance, with the effects of poverty and other societal stressors filtered out. It's called an "Academic Growth" score.

Advocates hope LAUSD's new growth scores will challenge our assumptions about school performance. Maybe they'll reveal unexpected bright spots: schools with low test scores that are actually helping students make huge strides. Maybe they'll show schools with dynamic reputations are actually stagnant.

Then again, the growth measures may not tell us much at all. Critics say it's not clear LAUSD's model -- identical to the formula behind recently released scores for Fresno, Long Beach and Oakland schools -- is capable of truly measuring what it claims to measure.

So what do these new "Academic Growth" scores mean? And how should we interpret the results? This video and our explainer below break it down.

FIRST, A BASIC DEFINITION: WHAT IS A GROWTH SCORE?

A growth score aims to show two important things simultaneously:

A growth score is a measure of a school's impact on its students, showing how well a school does at helping its students improve their standardized test scores from one year to the next.

A growth score is also a comparison, showing how one school's level of impact ranks against other schools serving kids with 'similar past scores and... demographics.' (For example, poverty levels.)

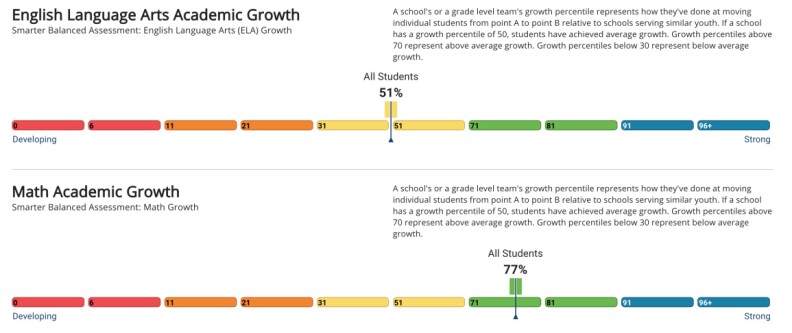

Growth scores range from 0 to 100 and are expressed as percentiles. That means:

- A school with a growth score of 50 does an average job of helping students improve their test scores, when compared to other schools working with kids from similar backgrounds and circumstances.

- A score of 70 or better represents "above-average growth," according to a breakdown of the scores on the results website.

- A score of 30 or lower represents "below-average growth."
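For readers who like to see the cutoffs spelled out, here is a small sketch of how a score maps onto those labels. The function name `growth_category` is hypothetical, and treating everything between 30 and 70 as "average" is an assumption based on the breakdown above, not code from the results website.

```python
def growth_category(score: float) -> str:
    """Map a 0-100 growth percentile to the labels described above.

    Assumption: scores strictly between 30 and 70 are labeled "average".
    """
    if not 0 <= score <= 100:
        raise ValueError("growth scores are percentiles between 0 and 100")
    if score >= 70:
        return "above-average growth"
    if score <= 30:
        return "below-average growth"
    return "average growth"


for s in (25, 50, 70):
    print(s, growth_category(s))
```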

These growth scores are calculated for both reading and math. The model also calculates growth scores for racial groups and other demographic groups within a school, like "economically advantaged" kids.

WHAT GROWTH SCORE DID MY SCHOOL GET?

You can look up the results for L.A. Unified School District campuses by clicking here. Independent charter schools aren't included in the analysis; only "affiliated" charter schools have growth scores.

(Similar growth measures for other districts, including Long Beach Unified and Oakland Unified, have also been published.)

WHY SHOULD I CARE ABOUT THESE SCORES?

Right now, when Californians talk about "test scores," they're mostly discussing the rates at which students are "meeting or exceeding" state standards -- and that's an important bottom-line metric about how our students and our taxpayer-funded schools are doing.

But there's another bottom-line question that test scores can't answer: over the course of a school year, are schools helping students make an appropriate amount of progress?

For example, students with really low test scores last year can make really big strides, but still fall short of the state standards on this year's test. Conversely, high-scoring students who meet standards easily might make very little progress over the course of the year.

"The growth measure is about saying, 'Knowing everything we know, which schools are moving students further, fastest?'" said Noah Bookman, executive director of the CORE Data Collaborative, which created the growth model and calculated the scores on LAUSD's behalf. (CORE is a non-profit organization created by eight California urban school systems, including LAUSD, that wanted to find ways to work together.)

HOW ARE THESE SCORES CALCULATED?

Here, read this 21-page technical report!

Or you could just read this summary:

- Find a student's test scores from last year.

- Compare that student to others who got similar test scores last year. The model uses test score data from a pool of around 2 million students across California to make these comparisons.

- Create a "customized prediction" for how much that student's score should improve. Using last year's scores as a baseline, the model draws on the statewide data to come up with a realistic target. (High schoolers only take the benchmark standardized tests once, so the model uses their eighth grade results as a baseline.)

- Adjust this prediction based on a student's demographic characteristics. The model adjusts the prediction for each student depending on whether they're "economically disadvantaged," an English learner, a foster child, homeless or whether they have a disability. Each prediction is also adjusted to consider the student's learning environment, factoring in school-wide test scores and other demographic measures. The idea behind these adjustments? A student's growth will only be measured against that of other students who share similar characteristics and are learning in similar circumstances.

- Compare the student's predicted improvement with their actual improvement on this year's standardized test. The difference between the two is supposed to reflect the difference a school made on the student's learning -- a difference that (theoretically) can't be attributed to their test results last year or their poverty level.

- Repeat this prediction process for almost every student in a school -- "almost every" student because some kids who switched schools or who didn't take the test the year before are excluded.

- Within the school, find the average difference between students' actual versus predicted growth. Some students will have exceeded their predicted growth. Some students won't hit their predicted growth target. The average of those differences is supposed to reflect the school's overall impact on all of its students' learning.

- Rank this school's average impact against other schools' results. Stacked into percentiles, this is where a school's growth score comes from.
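To make those steps concrete, here is a deliberately simplified sketch in Python. It is not CORE's model; the 21-page technical report describes the real statistics. Here the "customized prediction" is just the average current score of all students in the same peer group, defined as the same 25-point band of prior scores plus the same "economically disadvantaged" label. The names `Student`, `peer_key` and `growth_scores`, the band width, and the toy data are all hypothetical, and the sketch ignores the other labels and school-wide factors the real model uses.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Student:
    school: str
    prior_score: float    # last year's score (8th-grade score for high schoolers)
    current_score: float  # this year's score
    disadvantaged: bool   # "economically disadvantaged" yes/no label


def peer_key(s: Student, band: float = 25.0) -> tuple:
    """Hypothetical peer group: same prior-score band and same label."""
    return (int(s.prior_score // band), s.disadvantaged)


def growth_scores(students: list[Student]) -> dict[str, float]:
    # Predict each student's score as the average current score of every
    # student in the same peer group (a stand-in for the real prediction).
    groups = defaultdict(list)
    for s in students:
        groups[peer_key(s)].append(s.current_score)
    predicted = {key: mean(scores) for key, scores in groups.items()}

    # Actual minus predicted: the part this sketch attributes to the school.
    gaps = defaultdict(list)
    for s in students:
        gaps[s.school].append(s.current_score - predicted[peer_key(s)])

    # Average the gaps within each school to estimate its impact.
    impact = {school: mean(g) for school, g in gaps.items()}

    # Percentile rank: share of the other schools with a lower average impact.
    others = max(len(impact) - 1, 1)
    return {
        school: 100 * sum(other < own for other in impact.values()) / others
        for school, own in impact.items()
    }


# Invented toy data: two peer groups of students spread across four schools.
demo = [
    Student("A", 300, 330, False), Student("A", 310, 340, False),
    Student("B", 305, 310, False), Student("B", 300, 300, False),
    Student("C", 200, 240, True), Student("C", 210, 245, True),
    Student("D", 205, 215, True),
]
print(growth_scores(demo))
```

In this toy example, school C's students score far below school B's, yet C ranks higher: C's students beat their peer-group predictions while B's fell short.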

Clear as mud? We illustrate the process in the video at the top of this story. It's worth a watch.

ARE THESE MEASURES ACCURATE?

At a bare minimum, we know that growth measures don't have the same main problem that test scores do: growth scores do not closely track poverty among students. In fact, there appears to be almost no correlation between growth scores and poverty levels.

(Charts shared on Twitter by Kyle Stokes (@kystokes), December 12, 2019. Chart 1: every LAUSD school's test score in English vs. low-income enrollment. Chart 2: every LAUSD school's new Academic Growth score vs. low-income enrollment.)

For what it's worth, dozens of states use growth models to measure school performance. In particular, 23 states use a "student growth percentile" model that's highly similar to this new measure LAUSD and the CORE districts are using.

But even though their use is widespread, UC Berkeley economics professor Jesse Rothstein isn't convinced growth models are able to filter out all of the factors that affect students' test scores.

The model may know a student's socioeconomic or disability status, but the model doesn't know whether the student was sick or hungry on test day, hampering their score. The model doesn't know whether a student has involved caretakers -- like a parent who reads to the child often -- or access to after-school tutoring. Without knowing these things, Rothstein wonders how the model can isolate the impact the school is having on its students' growth.

This can lead to confusing results, Rothstein said. He believes different schools could get different growth scores "even if their actual effectiveness is the same."

"The more you do to separate out the piece that's plausibly due to the school rather than due to [the student] ... the less stable the measure is," Rothstein expounded in a follow-up interview. "So you can have a very stable measure that's capturing socioeconomic status, or you can have a measure that's freer of that, but that's less stable. There's not really a way to square that."

But the CORE Data Collaborative says that the results aren't random; most schools' growth scores are consistent year-to-year.

About half of the schools ranking as either "below average," "average," or "above average" in 2016 received the same ranking in the next year's results. Roughly 40 percent shifted one category lower or higher. The remaining 10 percent went from "below-" to "above-average," or vice-versa.
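Those stability figures come from a simple cross-tabulation: for each school, how many steps did its category move between two years? A short sketch of that tally; the function name and the ten schools below are invented, arranged only to mirror the roughly 50/40/10 split CORE reported.

```python
from collections import Counter

# Categories treated as rungs on a ladder, lowest to highest.
LADDER = ["below average", "average", "above average"]


def stability_breakdown(pairs):
    """Share of schools by how far their category moved between two years.

    `pairs` holds (first_year_category, next_year_category) tuples.
    Step 0 = same category, 1 = moved one rung, 2 = jumped end to end.
    """
    moves = Counter(abs(LADDER.index(a) - LADDER.index(b)) for a, b in pairs)
    total = sum(moves.values())
    return {step: moves[step] / total for step in range(len(LADDER))}


demo = (
    [("average", "average")] * 3
    + [("above average", "above average"), ("below average", "below average")]
    + [("average", "above average"), ("average", "below average"),
       ("above average", "average"), ("below average", "average")]
    + [("below average", "above average")]
)
print(stability_breakdown(demo))
```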

LET'S SAY MY SCHOOL GOT A LOW GROWTH SCORE. HOW CAN THE SCHOOL IMPROVE IT?

LAUSD officials say they are still figuring out how to advise schools that got below-average growth scores on ways to improve.

Rothstein worries the answer may be too easy: double down on test preparation and crowd out non-tested subjects like social studies, art and music.

But CORE's Noah Bookman says growth scores are actually a clearer diagnostic tool for schools trying to improve.

"Simpler is always better unless it's wrong," said Bookman. "If somebody is looking at year-over-year change -- which is an easy thing to explain -- [you might say] 'Last year, 50 percent of your kids were on grade level, this year 60 percent are on grade level, wasn't that a good result?' Well, it could be. It could also just be that the kids changed.

"If you were to misinterpret that as the impact the school is having," Bookman added, "you might come to the wrong conclusion."

IS THIS A 'VALUE-ADDED MODEL'?

Yes and no. Technically, LAUSD's system is called a "student growth percentile" model. Bookman said the model could also rightfully be called a "value-added model." Rothstein said these are two names for effectively the same thing.

While all of this is just jargon to most of us, the term "value-added model" or its acronym "VAM" evokes a bitter debate in education.

Some advocates have pushed to include "value-added" measures in teachers' job performance evaluations. Just as the growth model attempts to isolate a school's impact, VAM measures in teacher evaluations attempt to isolate an individual educator's effect on their students' learning.

In L.A., distrust of these scores runs especially high. In 2010, the L.A. Times calculated VAM scores for more than 11,000 LAUSD teachers and published the full database, prompting backlash. For this reason, the term "value-added" remains loaded.

WHEN MY CHILD GETS HIS 'CUSTOMIZED PREDICTION,' DOES THE GROWTH MODEL KNOW HOW RICH OR HOW POOR MY CHILD IS? OR WHAT KIND OF DISABILITY HE HAS?

The growth model only knows whether your child is labeled "economically disadvantaged" -- which usually means that a child is receiving either free or reduced-price school meals.

Though there are different qualifying thresholds for free meals and for reduced-price meals, the growth model doesn't get more specific than this binary. Same for homelessness or foster youth status -- a child either has the label or he does not.

But on other demographic markers, the growth model does consider a little more detail. For students in special education, the model differentiates between kids with "mild-to-moderate" and "moderate-to-severe" disabilities.

For English learners, the model adjusts differently for students based on how they scored on the state's language proficiency assessment, the "ELPAC."
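A growth model has to turn these labels into numbers somehow. Here is a hypothetical sketch of one encoding consistent with the description above: yes/no indicators for the binary labels, separate indicators for the two disability groupings, and one indicator per ELPAC level. The class and field names, the one-hot scheme, and the assumption that ELPAC results arrive as levels 1 through 4 are illustrations, not CORE's documented encoding.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Disability(Enum):
    NONE = "none"
    MILD_TO_MODERATE = "mild-to-moderate"
    MODERATE_TO_SEVERE = "moderate-to-severe"


@dataclass
class StudentProfile:
    # Yes/no only: free vs. reduced-price meals are not distinguished.
    economically_disadvantaged: bool
    homeless: bool
    foster_youth: bool
    disability: Disability = Disability.NONE
    # None if not an English learner; levels 1-4 are an assumption here.
    elpac_level: Optional[int] = None

    def features(self) -> list[int]:
        """Flatten the profile into 0/1 indicator columns."""
        cols = [int(self.economically_disadvantaged),
                int(self.homeless), int(self.foster_youth)]
        # One indicator per disability grouping ("none" is the baseline).
        cols += [int(self.disability is d)
                 for d in (Disability.MILD_TO_MODERATE,
                           Disability.MODERATE_TO_SEVERE)]
        # One indicator per assumed ELPAC level.
        cols += [int(self.elpac_level == lvl) for lvl in (1, 2, 3, 4)]
        return cols


profile = StudentProfile(True, False, False, Disability.MILD_TO_MODERATE, 2)
print(profile.features())
```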

IF EVERY KID GETS A 'CUSTOMIZED PREDICTION' BASED, IN PART, ON THEIR PEERS... IS IT POSSIBLE THAT DISADVANTAGED KIDS MIGHT HAVE AN EASIER TIME SHOWING HIGH GROWTH?

If the growth model didn't account for peer effects on a student's test score, schools that are higher income would have slightly higher growth scores, Bookman said in an interview.

But Bookman said that isn't the same as saying the growth model is "advantaging" lower-income schools or "penalizing" higher-income schools. Both higher-income and lower-income schools have equal chances of being high or low growth.

Video produced by Chava Sanchez
